I have been struggling recently with where to direct my focus and what I could write about that would add something material to the ongoing debates on “AI”, technology, and politics. Thanks to my friend Randy Bias for this post that inspired me to follow up:

This post triggered a few thoughts I’ve been having on the subject. Namely, that open source was born at a time that coincided with the apex of neoliberal thought, corresponding with free trade, borderless communication and collaboration, and other naive ideologies stemming from the old adage “information wants to be free”. Open source, along with its immediate forebear free software, carried with it a techno-libertarian streak that proliferated throughout the movement. Under the open source umbrella, there was a wide array of factions: the original free software political movement, libertarian entrepreneurs and investors, anarcho-capitalists, political liberals and progressives, and a hodgepodge of many others who came around to see the value of faster collaboration enabled by the internet. There was significant overlap amongst the factions, and the coalition held as long as they shared goals.

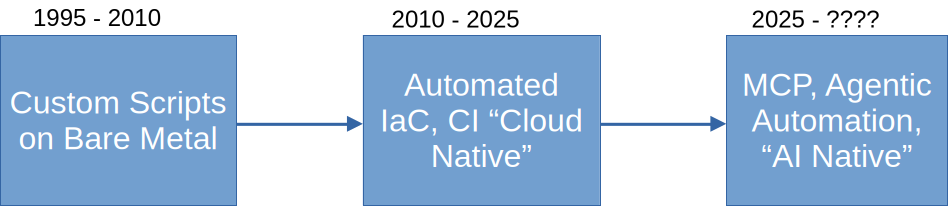

From 1998, when the term “open source” was coined, until the early 2010s, this coalition held strong, accomplishing much with robust collaboration between large tech companies, startup entrepreneurs, investors, independent developers, general purpose computer owners, and non-profit software foundations. This was the time when organizations like the Linux Foundation, the Apache Software Foundation, and the Eclipse Foundation found their footing and began organizing increasingly large swaths of the industry around open source communities. The coalition started to fray in the early 2010s for a number of reasons, including the rise of cloud computing and smartphones, and the overall decline of free trade as a guiding principle shared by most mainstream political factions.

Open source grew in importance along with the world wide web, which was the other grand manifestation of the apex of neoliberal thought and the free trade era. These co-evolving movements, open source and the advocacy for the world wide web, were fueled by the belief, now debunked, that giving groups of people unfettered access to each other would result in a more educated public, greater understanding between groups, and a decline in conflicts and perhaps even war. The nation state, some thought, was starting to outlive its purpose and would soon slide into the dustbin of history. (side note: you have not lived until an open source community member unironically labels you a “statist”)

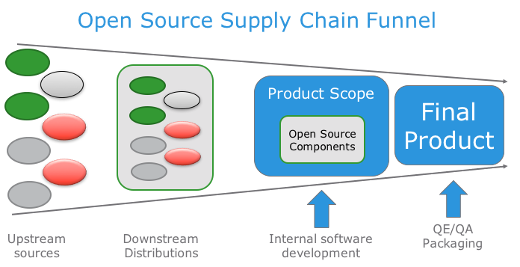

For a long time, open source participants happily continued down the path of borderless collaboration, falsely believing that the political earthquake that started in the mid-2010s would somehow leave them untouched. This naivety ignored several simultaneous trends that spelled the end of an era: Russian influence peddling; Brexit; the election of Trump; Chinese censorship, surveillance and state-sponsored hacking; and a global resurgence of illiberal, authoritarian governments. But even if one could ignore all of those geopolitical trends and movements, developments within the technology industry alone should have made the point. The proliferation of cryptocurrency, the growth of “AI”, and the use of open source tools to build data exploitation schemes should have been obvious clues that the geopolitical world was crashing our party. This blithe ignorance came to a screeching halt when a Microsoft employee discovered that state-sponsored hackers had infiltrated an open source project, XZ Utils, installing a targeted backdoor three years after assuming ownership of the project.

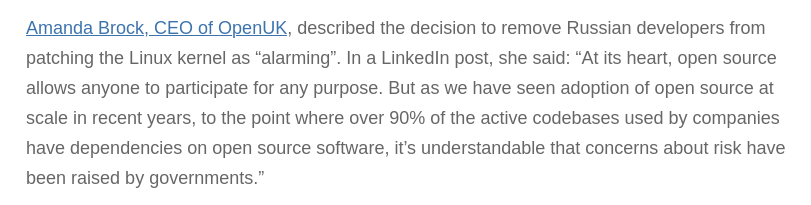

One cannot overstate the impact of this event. For the first time, we had to actively monitor the threats from nation states wanting to exploit our open source communities to achieve geopolitical goals. The reactions were varied. After some time, the Linux Foundation finally admitted that it could no longer ignore the origins of its contributors, demoting the status of some Russian contributors. At the other end of the spectrum is Amanda Brock, who prefers to stay ensconced in her neoliberal bubble, unperturbed by the realities of our modern political landscape.

One thing must be clear by now: we find ourselves knee-deep in a global conflict with fascist regimes that are united in their attempts to undermine free republics and democracies. As we speak, these regimes are looking to use open source communities and projects to accomplish their aims. They’ve done it with blockchains and cryptocurrencies. They’ve done it with malware. They’ve done it with the erosion of privacy and the unholy alliance of surveillance capitalism and state-sponsored surveillance. And they’re continuing to do it with the growth of the TESCREAL movement and the implementation of bias and bigotry through the mass adoption of AI tools. This is part and parcel of a plan to upend free thought and subjugate millions of people through the implementation of a techno-oligarchy. I don’t doubt the utility of many of these tools; I myself use some of them. But I also cannot ignore how these data sets and tools have become beachheads for the world’s worst people. When Meta, Google, Microsoft or other large tech companies announce their support of fascism and simultaneously release new AI models that don’t disclose their data sets or data origins, we cannot know for sure what biases have been embedded. The only way we could know for sure is if we could inspect the raw data sources themselves, as well as the training scripts that were run on those data sets. The fact that we don’t have that information for any of these popular AI models means that we find ourselves vulnerable to the aims of global conglomerates and the governments they are working in tandem with. This is not where we want to be.

From where I stand, the way forward is clear: we must demand complete transparency of all data sources we use. We must demand complete transparency in how the models were trained on this data. To that end, I have been disappointed by almost every organization responsible for governing open source and AI ecosystems, from the Linux Foundation to the Open Source Initiative. None of them seem to truly understand the moment we are in, and none of them seem to be prepared for the consequences of inaction. While I do applaud the Linux Foundation’s scrutiny of core committers on its projects, it does seem to have missed the boat on the global fascist movement that threatens our very existence.

We have to demand that the organizations that represent us do better. We must demand that they recognize and meet the moment, because so far they have not.