I conceived of this blog in 2023 as a skeptic’s outpost, demystifying AI hype and taking it down whenever necessary. I had no interest in fueling a speculative bubble, but as a technologist at heart, I’m always interested in seeing what’s coming down the road. This is my way of saying that this post is not about poking holes in the current hype cycle, but rather about taking a look at what I see developing in the application development world. Because I see major changes afoot, and I’m not sure folks are ready for them.

No, we’re not all losing our jobs

This is one of those zombie lies that won’t die. Turns out, sprinkling AI sauce into your IT and build environments makes humans in the loop even more important than before. Humans who understand how to build things; humans who understand how to communicate; humans who know how to write effectively; and humans who can conceive of a bigger picture and break it down into lanes of delivery. In other words, the big winners in an AI world are full-stack humans (I stole this from Michelle Yi. I hope she doesn’t mind) – a career in tech has never been more accessible to a humanities degree holder than now.

The big losers are the code monkeys who crank out indecipherable code and respond in monosyllabic grunts to anyone who deigns to ask – those “10x” engineers that everyone was lauding just 3 years ago. We already knew source code wasn’t that valuable, and now it’s less valuable than ever.

AI and DevOps

I had thought, until recently, that as AI infiltrated more and more application development, the day would come when developers would need to integrate their model development into the standard tools and platforms commonplace in our DevOps environments. Eventually, all AI development would succumb to the established platforms and tools that we’ve grown to know and love, the ones that make up our build, test, release, and monitor application lifecycle. I assumed there would be a great convergence. I still believe that, but I think I had the direction wrong. AI isn’t getting shoehorned into DevOps; it’s DevOps that is being shoehorned into AI. The tools we use today for infrastructure as code, continuous integration, testing, and releasing are not going to suddenly gain relevance in the AI developer’s world. A new class of AI-native tools is going to grow and obliterate (mostly) the tools that came before. These tools will both use trained models to be better at the build-test-release application development lifecycle and deploy apps that use models and agents as central features. It will be models all the way down, from the applications that are developed to the infrastructure that will be used to deploy, monitor, and improve them.

Ask yourself a question: why do I need a human to write Terraform modules? They’re just rule sets with logic that defines guardrails for how infrastructure gets deployed and in what sequence. But let’s take that one step further: if I train my models and agents to interact with my deployment environments directly – K8s, EC2, et al – why do I need Terraform at all? Training a model to interact directly with the deployment environments will give it the means to master any number of rulesets for deployments. Same thing with CI tools. Models trained to manage the build and release processes can do so without CI platforms. The model orchestrators will be the CI. A LangChain-based product is a lot better at this than CircleCI or Jenkins. The eye-opener for me has been the rise of standards like MCP, A2A, and the like. Now that we are actively defining the interfaces among models and agents, it’s a short hop, skip, and a jump to AI-native composite apps that fill our clouds and data centers, combined with AI-native composite platforms that build, monitor, and tweak the infrastructure that hosts them.
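To make the "rule sets with guardrails" idea a little more concrete, here is a minimal sketch of what a deployment tool exposed to an agent might look like. Everything in it is an assumption for illustration: `DeployRequest`, `DeployTool`, the two guardrail helpers, and the in-memory "environment" are hypothetical names, not part of Terraform, MCP, or any real platform. The point is only that the guardrails live in the tool the agent calls, rather than in a module a human writes.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class DeployRequest:
    """What an agent asks for (hypothetical shape)."""
    service: str
    replicas: int
    environment: str  # e.g. "staging" or "prod"


# A guardrail returns an error message, or None if the request is acceptable.
Guardrail = Callable[[DeployRequest], Optional[str]]


def max_replicas(limit: int) -> Guardrail:
    """Reject requests that ask for more than `limit` replicas."""
    def check(req: DeployRequest) -> Optional[str]:
        if req.replicas > limit:
            return f"replicas {req.replicas} exceeds limit {limit}"
        return None
    return check


def allowed_environments(*names: str) -> Guardrail:
    """Reject requests that target an environment outside `names`."""
    def check(req: DeployRequest) -> Optional[str]:
        if req.environment not in names:
            return f"environment {req.environment!r} is not allowed"
        return None
    return check


@dataclass
class DeployTool:
    """A tool an agent could call; a dict stands in for the real environment."""
    guardrails: list
    deployed: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def deploy(self, req: DeployRequest) -> dict:
        # Run every guardrail and collect all failures, not just the first.
        errors = [msg for g in self.guardrails if (msg := g(req)) is not None]
        if errors:
            self.audit_log.append(("rejected", req.service, errors))
            return {"ok": False, "errors": errors}
        # In a real system this would call a cluster or cloud API.
        self.deployed[(req.environment, req.service)] = req.replicas
        self.audit_log.append(("applied", req.service, []))
        return {"ok": True, "errors": []}


if __name__ == "__main__":
    tool = DeployTool(guardrails=[max_replicas(5), allowed_environments("staging")])
    print(tool.deploy(DeployRequest("api", 3, "staging")))
    print(tool.deploy(DeployRequest("api", 50, "prod")))
```

In this sketch the agent never touches the environment directly; it can only go through `deploy`, and every accepted or rejected request lands in `audit_log`, which is where a human in the loop would look.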

AI Native Tools are Coming

Once you fully understand the potential power of model-based development and agent-based delivery and fulfillment, you begin to realize just how much of the IT and DevOps world is about to be flipped on its head. Model management platforms, model orchestrators, and the like become a lot more prominent in this world, and the winners in the new infrastructure arms race will be those tools that take the most advantage of these feature sets. Moreover, when you consider the general lifespan of platforms and the longevity of the current set of tools most prevalent in today’s infrastructure, you get the impression that the time for the next shift has come. Hardly any of today’s most popular tools were in use prior to 2010.

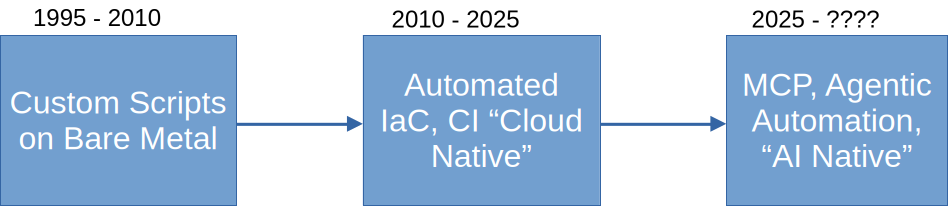

DevOps tools have followed a general pattern over the past 30 years, starting with the beginning of what I’ll call the “web era”:

The current crop of automation tools is becoming “legacy,” and its survival now rests on how well it acclimates to an AI Native application development world. Even what we call “MLOps” was mostly running the AI playbook in a CI “cloud native” DevOps world. Either MLOps platforms adapt and move to an AI Native context, or they will be relegated to legacy status (my prediction). I don’t think we yet know what AI Native tools will look like in 5 years, but if the speed of MCP adoption is any indicator, I think the transition will happen much more quickly than we anticipate. This is perhaps because well-designed agentic systems can be used to help architect these new AI Native systems.

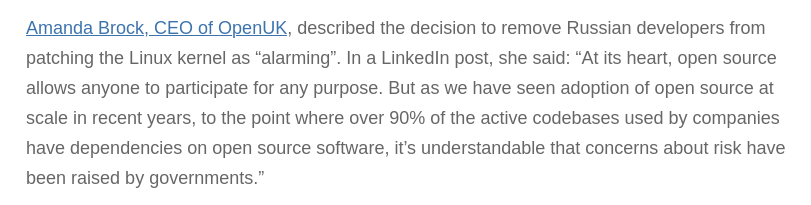

I also don’t think this will be any great glorious era of amazing transformation. Any migration to new systems will bring about a host of new challenges and obstacles, especially in the security space. I shudder to think of all the new threat vectors that are emerging as we speak to take advantage of these automated interfaces to core infrastructure. But that’s ok, we’ll design agentic security systems that will work 24/7 to thwart these threats! What could possibly go wrong??? And then there are all the other problems that have already been discussed by the founders of DAIR: bias, surveillance, deep fakes, the proliferation of misinformation, et al. We cannot count on AI Native systems to design inclusive human interfaces or prevent malicious ones. In fact, without proper human governance, AI native systems will accelerate and maximize these problems.

In part 2, I’ll examine the impact of AI Native on development ecosystems, open source, and our (already poor) systems of governance for technology.